Embracing Innovation: My Wind River Internship Journey

I like to compare a Wind River internship to stepping into a world where every day is filled with thrilling technologies, cutting-edge tools, and a team of incredible individuals who share your passion for innovation. That's exactly what I experienced during my DevOps internship at Wind River. As a final-year student at the National University of Costa Rica, I was eager to dive into the professional world, where I could learn and get hands-on experience with Terraform, AWS, Docker, Kubernetes, GitLab, and so much more. Wind River delivered, not only meeting my expectations but exceeding them in every way.

In this blog post, I want to take you on a journey through my time at Wind River. I'll share the challenges I faced and the lessons I learned while working on one specific task from my internship learning plan: GitLab performance benchmarking.

GitLab: The DevSecOps Platform

GitLab is an open-source code repository and collaborative software development platform for large DevOps and DevSecOps projects. It lets you integrate security into your DevSecOps lifecycle, and you can visualize and optimize that entire lifecycle with platform-wide analytics within the same system where you do your work.

Optimizing Performance with GitLab Benchmarking

Performance benchmarking plays a vital role in ensuring the efficiency, effectiveness, and overall quality of a system. During my internship at Wind River, I had the opportunity to dive into the world of performance benchmarking, particularly on GitLab. Here I will share my insights on how to run performance benchmarks on GitLab by leveraging the GitLab Performance Tool (GPT).

Understanding Performance Benchmarking:

Performance benchmarking is a process that involves evaluating and comparing the performance of a system, device, or process against established standards or competitors. By measuring and analyzing parameters such as speed, throughput, responsiveness, resource utilization, and reliability, performance benchmarking provides valuable insights into the efficiency and effectiveness of a system. It helps identify areas for improvement and allows organizations to make data-driven decisions to optimize performance.

Introducing the GitLab Performance Tool (GPT):

To facilitate performance testing of any GitLab instance, the GitLab Quality Engineering - Enablement team developed and maintains the GitLab Performance Tool (GPT). Built upon the open-source tool k6, GPT offers a range of tests designed specifically for performance testing GitLab. It allows organizations to assess the performance of their GitLab environment and confirm that it delivers the expected performance and user experience.

Running a Performance Benchmark on GitLab:

When it comes to running a performance benchmark on GitLab using GPT, GitLab recommends running it against a non-production instance to avoid any disruptions. A separate workstation or server with Docker installed is required for this purpose. By following the official documentation and guidelines provided by GitLab, one can set up and configure GPT to perform comprehensive performance tests on the GitLab environment. The tests may take several hours, depending on the system environment, and it is crucial to run them at the quietest possible time, when the environment is under little or no other load, to get accurate results.

Running the Tests:

To ensure accurate performance benchmarking results, GitLab provides a set of reference architectures that have been meticulously designed and tested by the GitLab Quality and Support teams in their own environments. These reference architectures serve as recommended deployments at scale, considering factors such as user activity, automation usage, mirroring, and repository/change size. Depending on your specific workflow and workload requirements, GitLab offers a range of reference architectures to choose from, each tailored to different user capacity levels.

These architectures include options for up to 1,000 users, 2,000 users, 3,000 users, 10,000 users, 50,000 users, and more. Selecting the appropriate architecture for your needs is a crucial decision, as it determines the balance between performance and resilience on one side and complexity on the other. The more performant and resilient you want your GitLab environment to be, the more complex the architecture becomes.
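To make the sizing decision concrete, here is a minimal sketch of picking the smallest tier that covers an expected user count. It only knows the tiers named in this post, and the function name `pick_tier` is hypothetical; it is an illustration of the idea, not part of GitLab or GPT.

```python
from bisect import bisect_left

# User-capacity tiers named in this post. GitLab documents others as well,
# so treat this list as an assumption made for illustration only.
TIERS = [1_000, 2_000, 3_000, 10_000, 50_000]


def pick_tier(expected_users: int) -> int:
    """Return the smallest listed tier that covers expected_users."""
    if expected_users < 1:
        raise ValueError("expected_users must be a positive number")
    # bisect_left finds the first tier that is >= expected_users.
    index = bisect_left(TIERS, expected_users)
    if index == len(TIERS):
        raise ValueError("expected_users exceeds the largest tier listed here")
    return TIERS[index]


if __name__ == "__main__":
    for users in (800, 2_500, 10_000):
        print(f"{users} users: use the up-to-{pick_tier(users)} architecture")
```

User count is only one input. Automation usage, mirroring, and repository size, mentioned above, can justify choosing a larger tier than this simple lookup suggests.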

Impact Across Wind River Teams:

The results obtained from running performance benchmarking tests using the GitLab Performance Tool (GPT) have the potential to create a significant impact on Wind River's GitLab environments. By simulating up to 50,000 users and evaluating the system's performance, teams can identify any limitations and gaps in resource allocation. This information enables them to proactively address performance issues by adding necessary resources such as CPU and memory. Implementing this tool and setting up regular performance reviews using automated pipelines would provide a systematic approach to track progress, ensure continuous improvement, and ultimately enhance the overall GitLab experience for numerous teams at Wind River.

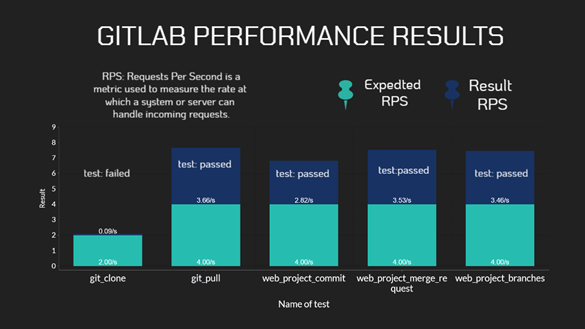

Here's a graphic that illustrates how the Data Team environment performed in the RPS (requests per second) test, which measures how fast the environment can handle requests such as a 'Git Clone', a 'Git Pull', or a 'Merge Request'.

For example, 'Git Pull', the command that updates a local repository with the latest code changes, had a target of 4.00/s and came in at 3.66/s, which is within the test's passing threshold, so the environment has enough resources for this task. 'Git Clone', on the other hand, was measured against an expected 2.00/s and fell outside the passing threshold, so the test failed, meaning that the environment should scale up its resources.
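One way to read these numbers is as an achieved rate compared against a target rate, with a test passing when the achieved rate reaches some fraction of the target. The sketch below works under that assumption. The function name `rps_check` and the 80% pass ratio are illustrative assumptions, not values taken from the results above, so check the GPT documentation for the thresholds your tests actually use.

```python
def rps_check(target_rps: float, achieved_rps: float, pass_ratio: float = 0.8) -> bool:
    """Return True when achieved_rps reaches pass_ratio of target_rps.

    pass_ratio is an assumed default chosen for illustration.
    """
    if target_rps <= 0:
        raise ValueError("target_rps must be positive")
    if not 0 < pass_ratio <= 1:
        raise ValueError("pass_ratio must be in the range (0, 1]")
    threshold = target_rps * pass_ratio
    return achieved_rps >= threshold


if __name__ == "__main__":
    # 'Git Pull' figures from this post: target 4.00/s, result 3.66/s.
    print("Git Pull passes:", rps_check(4.00, 3.66))
```

Under these assumptions, the 'Git Pull' result clears the threshold. The 'Git Clone' result is not quoted in this post, so it is left out of the example.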

About the author

Maria Angelica Robles Azofeifa is a DevOps Engineer at Wind River.