What Is Cloud Native?

Cloud-native applications are flexible, agile, and easier for developers to update and maintain.

Cloud native is an approach to designing, building, and operating distributed applications that run in a cloud computing environment. To meet customer demands efficiently, companies need highly scalable, flexible, and resilient applications. Cloud-native technologies offer a competitive advantage here because cloud-native applications are built from microservices.

In traditional application development, all functionality is built into one program. This can make applications difficult to scale and maintain as they grow larger. A cloud-native approach divides the functionality of an application into smaller microservices, which are easier for developers to update and maintain. The microservices communicate with each other to accomplish the business goals, but no single service has complete knowledge of the entire application. Because each microservice has modest computing resource requirements and can be deployed independently, cloud-native applications are inherently more agile.
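As a minimal sketch of that division of responsibility, the Python example below models two services that each own one concern and talk only through a narrow interface. The service names, the `reserve` method, and the stock data are all hypothetical, and plain in-process calls stand in for the network calls that separately deployed microservices would make.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Inventory(Protocol):
    """The only thing the order service knows about inventory (hypothetical interface)."""

    def reserve(self, sku: str, quantity: int) -> bool: ...


@dataclass
class InventoryService:
    """Owns stock levels; knows nothing about orders or customers."""

    stock: dict[str, int] = field(default_factory=dict)

    def reserve(self, sku: str, quantity: int) -> bool:
        available = self.stock.get(sku, 0)
        if quantity <= 0 or quantity > available:
            return False
        self.stock[sku] = available - quantity
        return True


@dataclass
class OrderService:
    """Owns orders; reaches inventory only through the narrow interface."""

    inventory: Inventory
    accepted: list[tuple[str, int]] = field(default_factory=list)

    def place_order(self, sku: str, quantity: int) -> str:
        if self.inventory.reserve(sku, quantity):
            self.accepted.append((sku, quantity))
            return "accepted"
        return "rejected"


# Illustrative data only.
inventory = InventoryService(stock={"sensor": 3})
orders = OrderService(inventory=inventory)
first = orders.place_order("sensor", 2)   # enough stock: accepted
second = orders.place_order("sensor", 2)  # only 1 left: rejected
```

Because `OrderService` depends only on the `Inventory` interface, either service could be updated or redeployed without the other needing to change, which is the maintainability argument made above.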

Cloud-Native Strategy at the Edge

At the edge, there is likely to be a multitier, or n-tier, architecture with a distributed control plane where provisioning, lifecycle management, logging, and security are performed.

The edge cloud is the site of a central regional controller that deploys and manages the various subclouds. This site runs the central unit (CU), as well as other workloads such as analytics and the near-real-time RAN intelligent controller (RIC). It could include a centralized dashboard, security management, container image registry, lifecycle management, and other functions. The centralized edge cloud is connected to the far edge by the midhaul network.

There are also edge servers and worker nodes. The edge servers are like mini data centers with lightweight control planes that manage the worker nodes, which could be located at the base station in a mobile access network or on-premises at an enterprise. The worker nodes run the application workloads, which can be anything from telco apps to operational technology–based workloads, as in factory automation or transit system management.

Where the workload is deployed depends on the latency requirements for the application. The lower the required latency (for example, 5–20 milliseconds between device and worker node), the farther out toward the edge the worker node will be deployed.
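The placement rule can be sketched in a few lines of Python. The site names and round-trip figures below are assumptions chosen for illustration, not measurements or values from any product; the point is only that a tighter latency budget pushes the workload to a site farther out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    round_trip_ms: float  # hypothetical device-to-site latency


# Ordered from most centralized to farthest out. All figures are assumptions.
SITES = [
    Site("regional site", 50.0),
    Site("metro site", 20.0),
    Site("far-edge site", 5.0),
]


def place_workload(latency_budget_ms: float) -> Site:
    """Return the most centralized site that still meets the latency budget."""
    for site in SITES:
        if site.round_trip_ms <= latency_budget_ms:
            return site
    raise ValueError(f"no site can meet a {latency_budget_ms} ms budget")
```

With these assumed figures, `place_workload(100)` selects the regional site, while `place_workload(10)` has to go all the way out to the far-edge site. Preferring the most centralized site that qualifies is a design choice made here because, as the cost discussion later in this article notes, hardware at the far edge is more expensive to deploy.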

As nodes are deployed farther out, a lightweight control plane and a smaller physical footprint become more important, hence the need for a cloud-native strategy.

The architecture is centered around the idea that resources should be highly elastic, required only for the minimum time possible, and assigned as needed to meet application demand and business needs. This approach allows for maximum agility in response to changing business requirements and market conditions.
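One way to picture elastic resource assignment is a proportional scaling rule: size the number of running instances to the observed load, within fixed bounds. The sketch below is an illustration under stated assumptions, not how any particular platform implements scaling. The function name, parameters, and default bounds are hypothetical, although the proportional formula resembles the one documented for the Kubernetes Horizontal Pod Autoscaler.

```python
import math


def desired_replicas(
    current: int,
    load_per_replica: float,
    target_per_replica: float,
    minimum: int = 1,
    maximum: int = 10,
) -> int:
    """Scale the replica count in proportion to observed load, within bounds."""
    if current < 1 or target_per_replica <= 0:
        raise ValueError("current must be >= 1 and target_per_replica must be > 0")
    raw = math.ceil(current * load_per_replica / target_per_replica)
    # Clamp so the workload never scales to zero or grows without limit.
    return max(minimum, min(maximum, raw))
```

For example, four replicas each seeing a load of 90 against a target of 60 would grow to six, and the same four replicas seeing a load of 15 would shrink to one, so resources are held only while demand requires them.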

Benefits of Adopting a Cloud-Native Strategy

Three Main Benefits of a Cloud-Native Approach

- Increased productivity and development

- Decreased costs

- Increased resilience and accessibility

The biggest benefits of cloud-native environments are in the areas of deployment, operations, management, and development of applications. A cloud-native environment fosters a DevOps approach to delivering applications and services.

More specifically, cloud-native benefits include extra security and isolation from the host environment, easy version control in software configuration management, a consistent environment from development to production, and a smaller footprint. In addition, cloud native lets developers use their preferred toolsets and enables rapid spin-up and spin-down as well as easy image updates. It also makes applications portable across all major distributions and eliminates environmental inconsistencies.

Edge Deployment Challenges

Along with the advantages of cloud native come some challenging issues around edge deployment:

Complexity

With multiple use cases and a heterogeneous environment, edge cloud deployments are inevitably complex, especially in operations and management. Not all companies have the resources to keep up with the fast-changing cloud-native landscape, where new open source projects are launched almost weekly.

Diversity

There is no one-size-fits-all approach for edge cloud deployments. There are very real differences between operational technology (OT) and information technology (IT) environments. Each has different latency, bandwidth, and scale requirements, among other things. Service providers need to bridge the gap.

Cost

With potentially thousands of edge servers deployed, the hardware costs can exceed five times what it costs to deploy an application in a data center. In some cases, a worker node might require four servers to host control planes, storage, and compute, and these will require extra fans, disks, and cooling. The costs can easily become untenable.

Security and performance

Since many edge sites don’t have the physical security that a large data center would, security needs to be built into the application and infrastructure. Edge clouds must also meet telco and other critical infrastructure performance requirements.

How Can Wind River Help?

Wind River Studio provides zero-touch provisioning, automatic updates, and dynamic scaling, among other network benefits.

Edge connections, especially 5G, are poised to explode in growth. To deliver, 5G networks must offer extremely low latency, high availability, security, and flexibility. They must also rely on distributed cloud architecture to be robust, resilient, and flexible across a multi-node, geo-distributed landscape.

Wind River Studio Cloud Platform

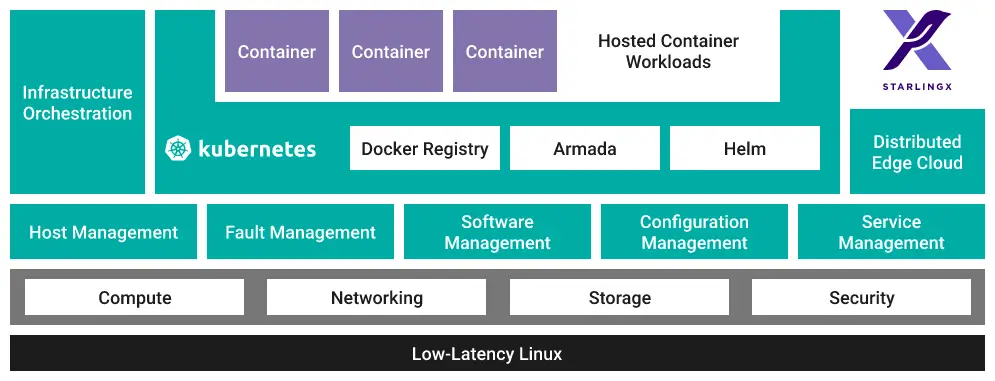

Wind River® delivers these features today with the cloud platform that is at the core of its operator capabilities. Based on the StarlingX project, Wind River Studio Cloud Platform provides a production-grade Kubernetes solution for managing edge cloud infrastructure and compiles best-in-class open source technology to deploy and manage distributed networks.

Key benefits to operator customers deploying Studio Cloud Platform include:

Controlled operational cost

Studio simplifies deployment, management, and maintenance. By offering single-pane-of-glass management with automated and hitless software updates and upgrades, your distributed cloud network becomes manageable without any need to disproportionately scale up staff or take down the network. Furthermore, integrated end-to-end security helps mitigate risk, giving you less to worry about.

Figure 1. Wind River Studio Cloud Platform

Market leadership

Zero-touch provisioning, automatic updates, and dynamic scaling are a few of the features that make Studio ideal for distributed networks. With the tools needed to easily deploy and manage a diverse distributed cloud network, Studio is the infrastructure that service providers need now to be first to market with 5G vRAN at scale.

New revenue streams

A fundamental premise of 5G is that it will enable new low-latency use cases, such as autonomous driving, robotics, and a myriad of yet-to-be-imagined services. With an out-of-the-box, optimized, ultra-low-latency, small-footprint infrastructure, Studio offers flexibility that will grow with your business.

Production-ready Kubernetes

Leverage deployment-ready open source with commercial testing, hardening, and lifecycle support and maintenance. Studio offers a fully supported open source platform for operators that you can deploy today.

New revenue streams

Build your digital lifecycle business with Studio for the distributed cloud.

- Lead in the machine economy, where your products, your customers, and your company are constantly connected and working together through one platform and a single pane of glass for everybody.

- Realize the economic advantages of applications and machines working together to compute, sense, predict, and run autonomously, often on the far edge, in the new digitally transformed world.

- Create new business models and get digital scale through one pane of glass, so that automation and machine learning can be utilized in mission-critical use cases — safely, securely, and throughout the product lifecycle.

Operational Leadership

Innovate how you operate your business on the far edge.

- Integrate deployment, operations, and services through one cloud-native platform for near-real-time, low-latency orchestration capabilities across the cloud.

- Evolve your capabilities to operate the increasingly complex, business- or mission-critical networks, devices, and applications that will dominate the world of 5G, distributed cloud, and intelligent systems, all through a single pane of glass for all your teams.

- Expand your capabilities so that your control and your insights span from development through to operations and additive services that let you operate at digital scale.

Development

Boost the power of teams with a real-time collaborative platform in a mission-critical world.

- Collaborate through a single pane of glass in a cloud-native environment. Apply shared expertise with digital scale for the lifecycle of your mission-critical products and services. Use machine learning and automation, and put the power of digital feedback loops into everything your teams do.

- Innovate on one platform with curated applications, simulation, emulation, automation, security tools, and testing capabilities that get you scale and leverage in near real time.

- Modernize with automation, full visibility, and easy configurability. Run simultaneous builds, with one pipeline for the platform and another for applications, on one mission-critical, cloud-native platform.

Product Management

Develop, operate, learn, and adjust your intelligent systems products and services.

- Build products and services in an iterative, mission-critical, safe, and secure manner throughout the lifecycle.

- Learn from constant digital feedback loops throughout the full lifecycle of your products. Leverage the ability to infuse automation and machine learning in ways that could change the OpEx envelope by placing data at the center of your decision processes.

- Get digital scale for your people, products, and customers as they share and learn in near real time together via a single pane of glass for the full lifecycle of your products. Add services (cybersecurity, certification) to your experiences from the start.