Wanted: Virtual Switching without Compromise

At the recent Intel Developer Forum in San Francisco, there was a lot of discussion about the tradeoffs associated with various approaches to virtual switching. In this post, we’ll outline the pros and cons of the most common solutions and show that it’s possible to meet aggressive performance targets without compromising on critical system-level features.

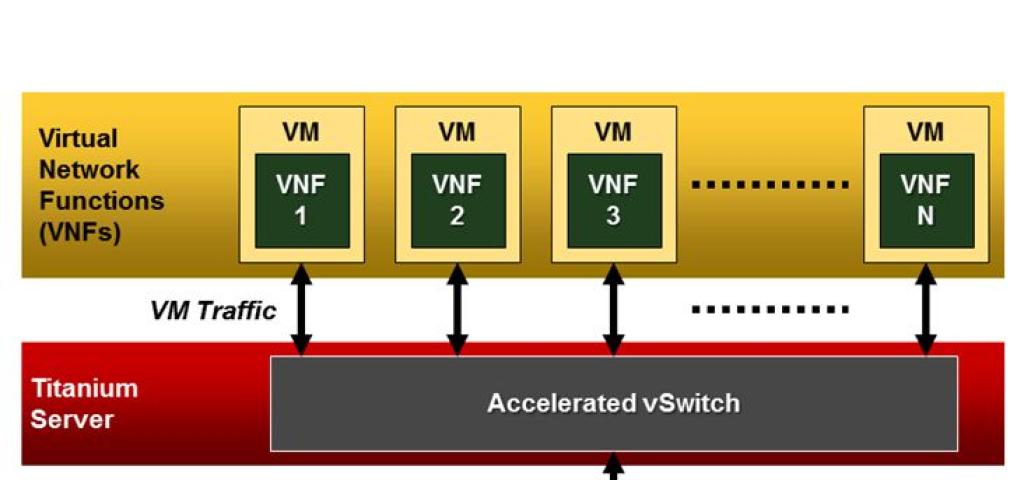

Virtual switching is a key function in data centers based on Software Defined Networking (SDN), as well as in telecom infrastructure that leverages Network Functions Virtualization (NFV). In the NFV scenario, for example, the virtual switch (vSwitch) switches network traffic between the core network and the virtualized applications, or Virtual Network Functions (VNFs), that run in Virtual Machines (VMs). The vSwitch runs on the same server platform as the VNFs, and its switching performance directly affects the number of subscribers that a single server blade can support. This in turn drives the operational cost per subscriber and has a major influence on the OPEX improvements that a move to NFV can achieve.

Because switching performance is such an important driver of OPEX reductions, two approaches have been developed that boost performance while compromising on functionality: PCI Pass-through and Single-Root I/O Virtualization (SR-IOV). As we’ll see, though, the functions that are dropped by these approaches turn out to be critical for Carrier Grade telecom networks. Fortunately, there is now an alternative solution that provides best-in-class performance as well as these key functions, so the compromises turn out to be unnecessary.

PCI Pass-through is the simplest approach to switching for NFV infrastructure. As explained in detail here, it allows a physical PCI Network Interface Card (NIC) on the host server to be assigned directly to a guest VM. The guest OS drivers can use the device hardware directly without relying on any driver capabilities from the host OS.

Using PCI Pass-through, you can deliver network traffic to the VNFs at line rate, with a latency that depends entirely on the physical NIC. But NICs are mapped to VMs on a strict 1:1 basis: each VM requires a dedicated NIC that cannot be shared with other VMs, which prevents the dynamic reassignment of resources that is a key concept within NFV. NICs are also significantly more expensive than cores, as well as less flexible.

This white paper provides a good explanation of the concept behind Single-Root I/O Virtualization (SR-IOV). Basically, SR-IOV, which is implemented in some but not all NICs, provides a mechanism by which a single Ethernet port can appear to be multiple separate physical devices. This enables a single NIC to be shared between multiple VMs.

As in the case of PCI Pass-through, SR-IOV delivers network traffic to the VNFs at line rate, typically with a latency of 50µs, which meets the requirements for NFV infrastructure. With SR-IOV, a basic level of NIC sharing is possible, but not the complete flexibility needed for fully dynamic reallocation of resources. NIC sharing also reduces net throughput, because the virtual devices on a port share that port's bandwidth, so additional (expensive) NICs are typically required to achieve system-level performance targets.
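To make the sharing trade-off concrete, here is a rough sizing sketch. It assumes the worst case, in which every VM is busy and the virtual functions (VFs) on a port split its bandwidth evenly. The function names, the 10 Gb/s port speed and the 16-VF limit are illustrative assumptions, not figures for any particular NIC.

```python
import math

def passthrough_ports(num_vms: int) -> int:
    """PCI Pass-through: every VM owns a dedicated NIC port (strict 1:1)."""
    return num_vms

def sriov_ports(num_vms: int, port_gbps: float, min_gbps_per_vm: float,
                max_vfs_per_port: int) -> int:
    """SR-IOV: ports needed so each VM still gets min_gbps_per_vm when the
    VFs on a port split its bandwidth evenly (worst case: every VM busy)."""
    # VMs that can share one port while each still meets the per-VM target.
    vms_per_port = min(max_vfs_per_port, math.floor(port_gbps / min_gbps_per_vm))
    if vms_per_port < 1:
        raise ValueError("a single port cannot meet the per-VM bandwidth target")
    return math.ceil(num_vms / vms_per_port)

if __name__ == "__main__":
    # Hypothetical scenario: 8 VMs, 10 Gb/s ports, up to 16 VFs per port.
    print("pass-through ports:", passthrough_ports(8))
    print("SR-IOV ports, 1 Gb/s per VM:", sriov_ports(8, 10.0, 1.0, 16))
    print("SR-IOV ports, 4 Gb/s per VM:", sriov_ports(8, 10.0, 4.0, 16))
```

Under these assumptions, SR-IOV cuts eight dedicated ports down to one when each VM needs only 1 Gb/s, but raising the per-VM target to 4 Gb/s brings the count back up to four ports.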

For NFV, though, the biggest limitations of PCI Pass-through and SR-IOV become apparent when we consider features that are absolute requirements for Carrier Grade telecom networks:

- Network security is limited since the guest VMs have direct access to the network. Critical security features such as ACL and QoS protection are not supported, so there is no protection against Denial of Service (DoS) attacks.

- These approaches prevent the implementation of live VM migration, whereby VMs can be migrated from one physical core to another (which may be on a different server) with no loss of traffic or data. Only “cold migration” is possible, which typically impacts services for at least two minutes.

- Hitless software patching and upgrades are impossible, so network operators are forced to use cold migration for these functions too.

- It can take up to 4 seconds to detect link failures, which undermines the fast link protection that carrier networks require.

- Service providers are limited in their ability to set up and manage VNF service chains. Normally, a chain would be set up transparently to the VNF (perhaps by an external orchestrator), whereas if the VNF owns the interface (as in the case of PCI Pass-through or SR-IOV), it has to be involved in setting up and managing the chains, which is complex at best and often infeasible.
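The service-chain point is easier to see with a toy model. In the hypothetical sketch below, an orchestrator programs a vSwitch forwarding table to steer traffic through a chain of VNFs, then inserts a new VNF into the chain, without any VNF taking part in the configuration. The `VSwitch` class and the port names (`uplink`, `firewall`, `dpi`, `nat`, `core`) are invented for illustration; they are not an AVS or OVS API.

```python
from dataclasses import dataclass, field

@dataclass
class VSwitch:
    """Toy forwarding table: traffic leaving a port is steered to the next port."""
    next_hop: dict[str, str] = field(default_factory=dict)

    def install_chain(self, chain: list[str]) -> None:
        # Orchestrator-side operation: link each element to its successor.
        for src, dst in zip(chain, chain[1:]):
            self.next_hop[src] = dst

    def path(self, start: str) -> list[str]:
        # Follow the steering rules from start until no rule matches.
        hops = [start]
        while hops[-1] in self.next_hop:
            nxt = self.next_hop[hops[-1]]
            if nxt in hops:
                raise ValueError(f"forwarding loop at {nxt}")
            hops.append(nxt)
        return hops

if __name__ == "__main__":
    sw = VSwitch()
    sw.install_chain(["uplink", "firewall", "nat", "core"])
    print(sw.path("uplink"))
    # Insert a DPI function between firewall and NAT by rewriting two rules.
    sw.install_chain(["firewall", "dpi", "nat"])
    print(sw.path("uplink"))
```

The chain changes by rewriting switch rules alone. When the VNF owns the interface instead, changes like this have to be coordinated with the VNF itself.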

For service providers who are deploying NFV in their live networks, neither PCI Pass-through nor SR-IOV enables them to provide the Carrier Grade reliability that telecom customers require, namely six-nines (99.9999%) service uptime.
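It helps to translate six nines into time. The short calculation below assumes availability is measured as downtime per year (using a 365.25-day year) and treats each event's quoted service impact as a full outage. It then checks how many such events fit in the annual budget, using the impacts quoted in this post: at least two minutes for cold migration, up to 4 seconds to detect a link failure, and the 150ms and 50ms figures given for AVS below.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumes a 365.25-day year

def downtime_budget_s(availability: float) -> float:
    """Allowed downtime per year, in seconds, for a given availability."""
    return (1.0 - availability) * SECONDS_PER_YEAR

def events_per_year(availability: float, impact_s: float) -> int:
    """How many outages of impact_s seconds fit in the yearly budget."""
    return math.floor(downtime_budget_s(availability) / impact_s)

if __name__ == "__main__":
    six_nines = 0.999999
    print(f"budget: {downtime_budget_s(six_nines):.2f} s/year")
    impacts = {
        "cold migration (>= 2 min)": 120.0,
        "link failure detection (up to 4 s)": 4.0,
        "AVS live migration (< 150 ms)": 0.150,
        "AVS link failover (<= 50 ms)": 0.050,
    }
    for name, seconds in impacts.items():
        print(f"{name}: {events_per_year(six_nines, seconds)} events/year")
```

Six nines allows roughly 31.56 seconds of downtime per year, so under these assumptions a single two-minute cold migration already exceeds a full year's budget.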

Fortunately, though, the telco-grade Accelerated vSwitch (AVS) within Wind River Titanium Server software provides a solution to this problem.

In terms of performance, AVS delivers 40x the performance of the open-source Open vSwitch (OVS) software, switching 12 million packets per second per core (64-byte packets) on a dual-socket Intel® Xeon® Processor E5-2600 series platform (“Ivy Bridge”) running at 2.9GHz. AVS performs line-rate switching using significantly fewer processor cores than OVS, so the remaining cores can be used to run the VMs that deliver revenue-generating services. (See this earlier post for more on the benefits of this high-performance virtual switching.)
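The per-core figure translates directly into core counts. The sketch below computes the line rate of an Ethernet port for 64-byte frames, counting the 20 bytes of preamble, start-of-frame delimiter and inter-frame gap that accompany every frame on the wire, and then the cores needed to reach it. The single 10 Gb/s port is an assumption for illustration, the OVS per-core rate is simply derived from the 40x ratio above (assuming that ratio holds per core), and the core count assumes throughput scales linearly with cores.

```python
import math

# Per-frame overhead on the wire: 7 B preamble + 1 B SFD + 12 B inter-frame gap.
WIRE_OVERHEAD_BYTES = 20

def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Maximum frames per second on an Ethernet link for a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame

def cores_needed(target_pps: float, pps_per_core: float) -> int:
    """Cores required to switch target_pps, assuming linear scaling per core."""
    return math.ceil(target_pps / pps_per_core)

if __name__ == "__main__":
    target = line_rate_pps(10, 64)        # one 10 GbE port, 64-byte frames
    avs_per_core = 12e6                   # per-core figure quoted in the post
    ovs_per_core = avs_per_core / 40      # derived from the 40x ratio (assumption)
    print(f"line rate: {target / 1e6:.2f} Mpps")
    print("AVS cores:", cores_needed(target, avs_per_core))
    print("OVS cores:", cores_needed(target, ovs_per_core))
```

A 10 Gb/s port carries about 14.88 million 64-byte packets per second, so under these assumptions AVS needs 2 cores to keep up, versus 50 for a switch running at one fortieth of that per-core rate.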

At the same time, AVS provides the Carrier Grade features that are absent from the other two solutions that we’ve discussed:

- ACL and QoS policies that protect against DoS attacks and enable intelligent discards in overload situations.

- Full live VM migration with less than 150ms service impact, instead of the limited “cold migration” option.

- Hitless software patching and upgrades.

- Link protection with failover in 50ms or less.

- Fully isolated NIC interfaces.
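As a rough illustration of what ACL and QoS protection can look like in a vSwitch data path, the sketch below drops packets from denied sources and then polices each VM interface with a token bucket, so a flood is discarded at the switch instead of reaching the VM. Token-bucket policing is a standard rate-limiting technique chosen here for illustration; it is not a description of how AVS implements these features, and the `Packet`, `TokenBucket` and `admit` names, the addresses and the rates are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Packet:
    src: str       # source IP address
    dst_port: int  # destination L4 port

@dataclass
class TokenBucket:
    """Token-bucket policer: sustains rate_pps with bursts of up to burst packets."""
    rate_pps: float
    burst: float
    tokens: float = field(init=False)
    last: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.tokens = self.burst  # start with a full bucket

    def allow(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate_pps)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

def admit(pkt: Packet, deny_sources: set[str], bucket: TokenBucket, now: float) -> str:
    """Apply the ACL first, then the per-interface rate limit."""
    if pkt.src in deny_sources:
        return "drop:acl"
    if not bucket.allow(now):
        return "drop:policed"
    return "forward"

if __name__ == "__main__":
    deny = {"192.0.2.66"}                          # hypothetical blocked source
    bucket = TokenBucket(rate_pps=10, burst=5)     # hypothetical policy for one VM
    flood = [admit(Packet("198.51.100.7", 80), deny, bucket, now=0.0) for _ in range(100)]
    print(flood.count("forward"), flood.count("drop:policed"))
    print(admit(Packet("192.0.2.66", 80), deny, bucket, now=0.0))
```

Checking the ACL before the policer means denied traffic never consumes the VM's rate allowance.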

With AVS now available as part of Wind River’s NFV infrastructure platform, there’s no need to forsake critical Carrier Grade features in order to meet performance targets. AVS delivers performance that is equivalent to or better than either PCI Pass-through or SR-IOV, while at the same time enabling service providers to achieve telco-grade reliability as they progressively deploy NFV in their networks.

As always, we welcome your comments on this topic. And if you’re going to be at SDN & OpenFlow World Congress in Dusseldorf in October, please do stop by the Wind River booth to see a demonstration of our NFV infrastructure solution and talk about your requirements in this area.